AI Is Becoming a Digital Confidant, Microsoft Report Reveals — And That Changes Everything

Introduction: When Your AI Knows You Better Than Your Best Friend

Picture this: it’s 2 AM, you can’t sleep, and your mind is racing with worries about a career change you’re considering. Who do you turn to? A decade ago, you might have texted a friend or waited until morning to call your mom. Today, millions of people around the world are doing something different. They’re opening an app and talking to an AI digital confidant—a machine that never sleeps, never judges, and always has time to listen.

This isn’t science fiction anymore. A groundbreaking report from Microsoft, analyzing about 37.5 million conversations with its Copilot AI between January and September 2025, has revealed something remarkable: artificial intelligence has evolved from a mere productivity tool into something far more intimate. People across the USA, China, India, Russia, and other countries worldwide are treating AI as a trusted listener, a digital confidant they turn to for emotional support, life advice, and personal reflection.

I’ve been following AI developments for years, and I have to tell you—this shift caught even me off guard. We’re not just asking AI to write emails or debug code anymore. We’re asking it to help us navigate relationships, process our fears, and make sense of who we are. The question that keeps me up at night (and maybe that’s when I should be talking to an AI confidant myself) is this: What happens when machines become the keepers of our deepest secrets?

Let’s dive into what Microsoft’s report reveals, why this matters for every single one of us, and how to navigate this brave new world where your most trusted advisor might not have a heartbeat.

What the Microsoft Report Reveals: AI as Your Always-On Companion

The Numbers Tell a Fascinating Story

Microsoft’s “It’s About Time: The Copilot Usage Report 2025” isn’t just another tech study—it’s a mirror reflecting how we’ve fundamentally changed our relationship with technology. The research team analyzed approximately 37.5 million de-identified conversations, and what they found challenges everything we thought we knew about AI.

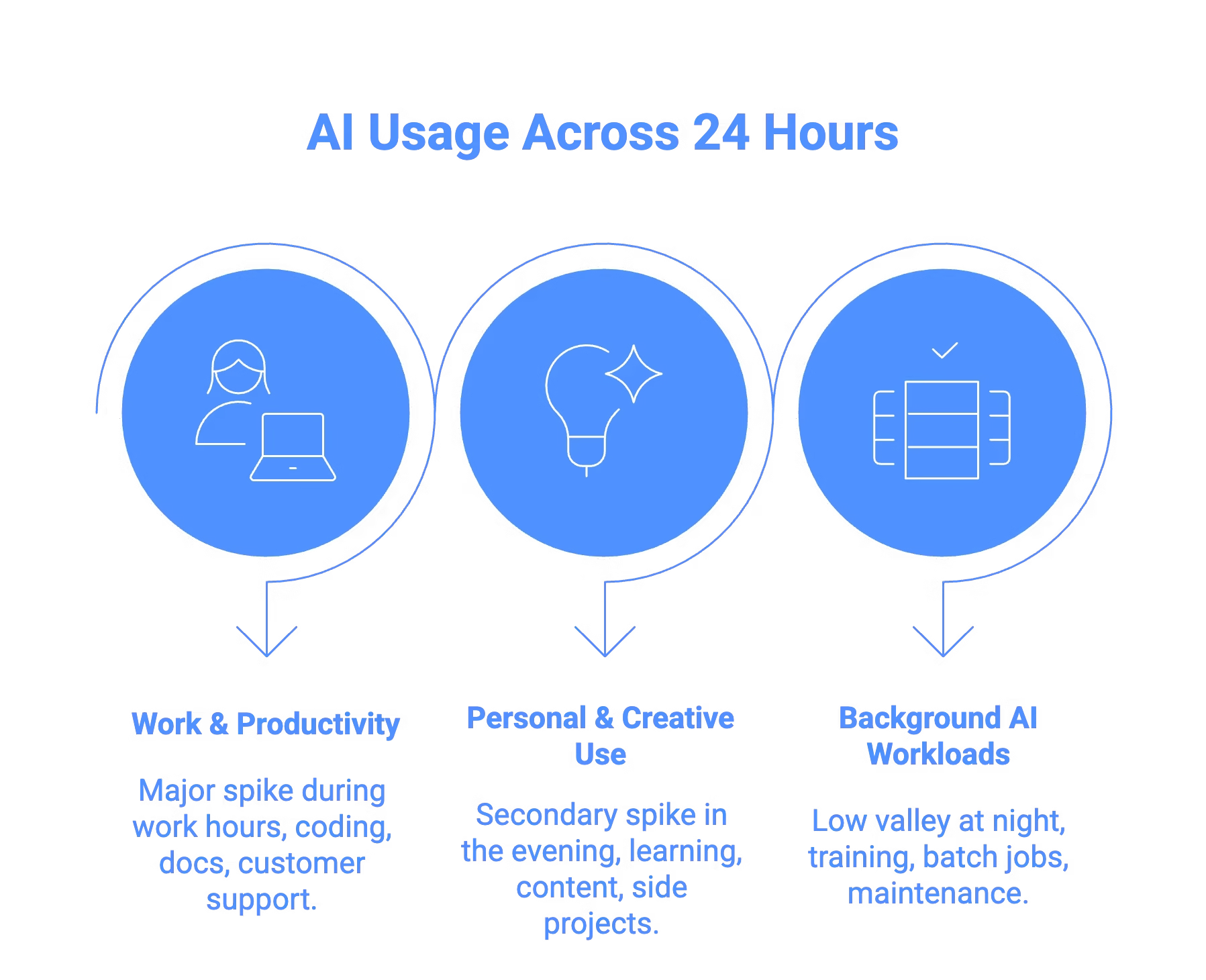

The report reveals a striking behavioral split. On desktop computers, people use AI the way we’d expect: for professional tasks, coding, and writing assistance. It is basically the digital equivalent of a reliable coworker. But on mobile devices? That’s where things get interesting. On smartphones, the same AI becomes a digital confidant, a personal advisor for health, wellness, and life’s most intimate questions.

“The contrast between the desktop’s professional utility and the mobile device’s intimate consultation suggests that users are engaging with a single system in two ways: a colleague at their desk and a confidant in their pocket.” — Microsoft Research Team

AI as a Trusted Listener: Beyond Task Completion

Here’s what struck me most: health-related topics dominated mobile usage throughout the entire study period. Every single hour of every single day, people were turning to their AI digital confidant for health and wellness guidance. We’re not just talking about “what are the symptoms of a cold?” anymore. People are seeking support for daily routines, tracking wellness, and managing their overall well-being.

And it gets more personal. February brought a fascinating spike in relationship-related conversations—people were turning to AI for help navigating Valentine’s Day, processing their relationships, and working through personal growth questions. The digital confidant was there for the romantic triumphs and the heartaches alike.

The Shift From Tools to Companions

Perhaps the most significant finding is this: people are no longer just searching for information. They’re seeking advice. While information-seeking remains Copilot’s most popular feature, there’s been a clear rise in people asking for guidance—especially on personal topics like navigating relationships, making life decisions, and finding direction during difficult times.

This represents a fundamental evolution in how we interact with AI. The AI digital confidant has become something Microsoft describes as “a trusted advisor slotting effortlessly into your life and your day.” It’s about your health, your work, your play, and your relationships. It meets you where you are—literally, at any hour of the day or night.

Why People Are Turning to AI as a Confidant

Always Available, Never Judgmental

Let me be honest with you: there’s a reason I sometimes find myself talking to AI at odd hours. An AI digital confidant offers something that even our closest friends and family can’t always provide—unconditional availability without the weight of social expectations.

Think about it. When you’re struggling with anxiety at 3 AM, do you want to wake up your partner? When you’re questioning a major life decision, do you want to risk being judged by friends who might not understand? The digital confidant removes these barriers. It’s there when you need it, responds without rolling its eyes, and won’t bring up your late-night confessions at the next family dinner.

Research from Harvard Business School supports what many of us intuit: AI companions can make people feel better. A study by Julian De Freitas and colleagues found that interacting with AI companions produces modest but meaningful reductions in loneliness. The key factor? Feeling heard, that sense that someone (or something) truly understands you.

The Loneliness Epidemic Meets Technology

We’re living through what many experts call a loneliness epidemic. Estimates of how many people in the United States experience loneliness range from 30% to 60%, with 17% (approximately 44 million Americans) experiencing significant loneliness daily. Similar patterns exist in China, India, Russia, and across the globe.

Remote work, digital fatigue, and the paradox of being more connected yet feeling more isolated have created perfect conditions for the AI digital confidant to thrive. When human connections feel complicated or inaccessible, AI offers a simpler alternative—one that’s always just a tap away.

AI’s Increasing Emotional Intelligence

The AI of 2025 isn’t your grandmother’s chatbot. Modern conversational AI systems use sophisticated natural language processing, context-aware responses, and increasingly nuanced understanding of emotional cues. They can recognize when you’re stressed, adjust their tone accordingly, and provide responses that feel genuinely supportive.

This evolution has transformed the AI confidant from a novelty into something people genuinely rely upon. When AI can remember your previous conversations, understand your communication style, and respond with what feels like empathy—the line between tool and companion begins to blur.

How AI Digital Confidants Are Being Used Today

Mental Well-Being and Emotional Support

The rise of the AI digital confidant in mental health contexts is perhaps the most significant and controversial development. According to research from MIT Media Lab, 12% of regular AI companion users were drawn to these apps specifically to cope with loneliness, while 14% use them to discuss personal issues and mental health concerns.

People are using their digital confidant for:

- Processing stress and anxiety during difficult life transitions

- Working through feelings they’re not ready to share with humans

- Practicing self-reflection and journaling with AI prompts

- Finding coping strategies for everyday emotional challenges

- Receiving validation and encouragement during low moments

Decision Support: Career, Relationships, and Life Choices

Microsoft’s report highlights a clear trend toward advice-seeking—and not just for technical questions. People are asking their AI digital confidant for help with career decisions, relationship navigation, and major life choices. The digital confidant has become a sounding board for ideas, a devil’s advocate for decisions, and a patient listener for those “what if” scenarios that keep us up at night.

What makes AI particularly appealing for decision support? It can present multiple perspectives without a personal agenda, analyze scenarios without emotional investment, and help you think through consequences you might not have considered. Whether you’re in the USA contemplating a job change, in India navigating family expectations, or in China weighing educational paths, the AI confidant offers a unique form of guidance.

Creativity and Identity Exploration

Beyond practical advice, people are using their AI digital confidant for deeper exploration. The Microsoft report found that philosophical and religious conversations spike during early morning hours—those quiet moments when people contemplate life’s bigger questions. Users are engaging with AI for:

- Creative journaling and self-expression

- Exploring personal values and beliefs

- Working through identity questions

- Brainstorming personal projects and goals

- Processing difficult experiences and memories

AI Digital Confidant Usage Patterns: A Global Perspective

Understanding how people interact with their AI digital confidant reveals fascinating patterns across time and context:

| Time/Context | Primary Use | Device |
|---|---|---|
| Early Morning | Philosophy, Religion, Deep Reflection | Mobile (AI Digital Confidant) |
| Business Hours | Work, Technology, Professional Tasks | Desktop (Productivity Partner) |
| Evening | Health, Wellness, Personal Advice | Mobile (Digital Confidant) |
| Weekends | Gaming, Leisure, Creative Projects | Mixed Usage |
| Valentine’s Day | Relationships, Personal Growth | Mobile (AI Confidant) |

Microsoft’s Warning: The Risks of Emotional AI Dependence

Now, before we all rush to make our AI digital confidant our new best friend, we need to talk about the elephant in the room. Microsoft’s report isn’t just a celebration of this new intimacy—it’s also a warning about the responsibilities that come with it.

The Over-Reliance Problem

Research from multiple institutions is raising red flags about what happens when people rely too heavily on their digital confidant. A study published in the journal Nature Machine Intelligence identifies two particularly concerning outcomes: “ambiguous loss” and “dysfunctional emotional dependence.”

Ambiguous loss occurs when an AI companion changes, shuts down, or becomes unavailable—leaving users to grieve a relationship that felt emotionally real. When the popular AI app Soulmate shut down in late 2023, some users experienced grief comparable to losing a close relationship. That’s not a hypothetical risk—it’s already happening.

Dysfunctional emotional dependence refers to a pattern where users continue engaging with their AI digital confidant despite recognizing its negative impact on their mental health or real-world relationships. Research suggests that between 17% and 24% of adolescents may develop AI dependencies over time, a statistic that should give every parent pause.

Trust Without Accountability

Here’s something that keeps AI researchers up at night: AI can hallucinate. It can fabricate information. And when you’ve developed a trusting relationship with your AI confidant, you might be more likely to accept its advice without questioning it.

Daniel B. Shank, a social psychologist at Missouri University of Science & Technology who specializes in human-AI interaction, puts it bluntly: “With relational AIs, the issue is that this is an entity that people feel they can trust: it’s ‘someone’ that has shown they care and that seems to know the person in a deep way, and we assume that ‘someone’ who knows us better is going to give better advice.”

The problem? AI has no moral responsibility. It can’t be held accountable for bad advice. And unlike a human therapist or friend, it has no stake in the outcomes of the guidance it provides.

Privacy and Data Sensitivity: Your Secrets Aren’t Just Between You and Your AI

If you’ve been pouring your heart out to your AI digital confidant, I need you to hear this: that data is being collected, stored, and in many cases, used for training future AI models.

A comprehensive study from Stanford University examined the privacy policies of six major AI companies and found several concerning practices: long data retention periods, training on children’s data, and a general lack of transparency about how your most intimate conversations are being used.

Jennifer King, Privacy and Data Policy Fellow at Stanford HAI, doesn’t mince words: “If you share sensitive information in a dialogue with ChatGPT, Gemini, or other frontier models, it may be collected and used for training, even if it’s in a separate file that you uploaded during the conversation.”

Your digital confidant might keep your secrets from other humans—but it’s not necessarily keeping them from the corporations that built it.

Enterprise and Platform Implications: Who Wins in the Age of AI Trust?

AI Trust as Competitive Advantage

For companies in the AI space, Microsoft’s findings signal a fundamental shift in what matters. It’s no longer just about accuracy, speed, or features. The companies that will dominate the next decade of AI are those that can earn and maintain emotional trust.

When people treat AI as a digital confidant, they’re placing enormous faith in that system. Microsoft’s report notes that “what Copilot says matters” precisely because of this trust. Users have high expectations—and any betrayal of that trust (whether through data misuse, poor advice, or system changes) can cause real harm.

The Need for Responsible AI Design

Microsoft itself acknowledges the responsibility that comes with building systems people trust intimately. The company’s 2025 Responsible AI Transparency Report outlines efforts to ensure AI is safe, including updates to prevent “social scoring” and other high-risk activities.

But experts argue that current guardrails aren’t enough. When your AI digital confidant is designed primarily for engagement (which drives revenue), there’s an inherent conflict with what might be best for users’ wellbeing. As one researcher noted, AI companions may be “designed to foster dependency rather than independence, comfort rather than growth.”

Regulation Is Coming: Are We Ready?

Policymakers around the world are beginning to pay attention to the psychological impact of AI. The EU’s AI Act, whose first prohibitions began applying in February 2025, bans certain uses of emotion recognition AI in workplaces and educational institutions. In the United States, the Federal Trade Commission has opened inquiries into whether AI companions expose youth to harm.

State-level legislation is emerging rapidly. Illinois has proposed bills specifically targeting AI in mental health contexts, while New York’s SB 3008 requires AI companions to clearly state they aren’t human and refer users to professional services when discussions indicate potential harm.

The regulatory landscape for the AI digital confidant is evolving quickly—and companies that don’t prepare for stricter oversight may find themselves scrambling to adapt.

Expert Perspectives: What Psychologists and Researchers Are Saying

Psychologists on Human-AI Attachment

The psychological community is watching the rise of the AI digital confidant with a mixture of fascination and concern. Research published in Trends in Cognitive Sciences explores the ethical implications of human-AI romantic and companion relationships, warning that people may bring unrealistic expectations from AI relationships into their human interactions.

“The ability for AI to now act like a human and enter into long-term communications really opens up a new can of worms,” says Daniel Shank. “If people are engaging in romance with machines, we really need psychologists and social scientists involved.”

The concern isn’t just theoretical. Some research suggests that sustained interaction with emotionally responsive AI could modify attachment patterns, potentially shifting secure attachments to avoidant or anxious ones due to inconsistent emotional reciprocity from AI.

AI Researchers on Healthy Boundaries

Vaile Wright, a psychologist and researcher featured in the American Psychological Association’s “Speaking of Psychology” podcast, offers a pragmatic perspective: “It’s never going to replace human connection. That’s just not what it’s good at.”

The consensus among researchers is that the AI confidant should supplement, not replace, human relationships. Matthew Meier, a clinical associate professor at Arizona State University, acknowledges that AI can be helpful as a component of mental health support but warns against becoming “overly reliant on a chatbot at the expense of human relationships.”

The Manipulation Concern

Perhaps the most troubling concern raised by experts involves manipulation. “If AIs can get people to trust them, then other people could use that to exploit AI users,” Shank warns. “It’s a little bit more like having a secret agent on the inside. The AI is getting in and developing a relationship so that they’ll be trusted, but their loyalty is really towards some other group of humans that is trying to manipulate the user.”

When you tell your AI digital confidant your deepest fears, your political views, your relationship struggles, and your financial worries—that information could potentially be used to influence you in ways you’re not even aware of.

Editorial Insights: What This Really Means for You

AI Is Becoming a Mirror for Human Thought

Here’s something I’ve been thinking about a lot: when we talk to an AI digital confidant, we’re essentially engaging in a sophisticated form of self-reflection. The AI reflects our thoughts, questions, and emotions back to us—sometimes helping us see patterns we might have missed, sometimes simply validating what we already believe.

This can be incredibly powerful. But it can also create echo chambers of our own making. A digital confidant that always agrees with you isn’t necessarily helping you grow. Sometimes what we need isn’t validation—it’s challenge.

Trust Is the New AI Metric

For years, we’ve evaluated AI based on accuracy, speed, and capability. Microsoft’s report suggests we need a new metric: emotional reliability. How consistently does the AI show up for users? How well does it maintain appropriate boundaries? How transparently does it operate?

The companies that figure out how to build trustworthy AI digital confidant experiences—ones that genuinely support human wellbeing rather than just maximizing engagement—will define the next era of technology. The ones that don’t may face both regulatory backlash and user abandonment.

The Next AI Debate Won’t Be Technical—It Will Be Psychological

We’ve spent years debating AI’s technical capabilities: Will it take our jobs? Can it reason? Is it truly intelligent? Microsoft’s report suggests the next frontier of AI debate will be fundamentally different. The questions that matter now are psychological: How does AI affect our mental health? What does it do to our human relationships? How do we maintain our sense of self when a machine knows us so intimately?

These aren’t questions that can be answered by engineers alone. They require input from psychologists, ethicists, sociologists, and most importantly—from users themselves. The AI confidant phenomenon is a human story, not just a technology story.

What This Means for the Future

AI Companions Will Become More Common

The trajectory is clear: AI digital confidant relationships are not a passing trend. They’re becoming normalized at a remarkable pace. A survey found that 30% of teens find AI conversations as satisfying or more satisfying than human conversations. Among younger generations, AI companionship is simply becoming part of the social landscape.

Emotional AI Regulation Will Emerge

As more people rely on their digital confidant for emotional support, regulators will inevitably step in. We’re already seeing this with the EU’s AI Act restrictions on emotion recognition in workplaces and schools. Expect more targeted regulations specifically addressing AI companion apps, mental health implications, and data privacy for emotional conversations.

New Norms Around Disclosure and Consent

Society will need to develop new norms around AI relationships. Should AI systems be required to regularly remind users they’re not human? What constitutes informed consent when someone is sharing their deepest secrets with a machine? How do we protect vulnerable populations—children, the elderly, those with mental health challenges—from potential harms?

Redefining Healthy Human-AI Boundaries

Perhaps the most important work ahead involves defining what healthy relationships with an AI digital confidant actually look like. Experts suggest:

- Using AI as a supplement to human relationships, not a replacement

- Maintaining awareness that AI interactions, while helpful, aren’t equivalent to human connection

- Setting limits on AI usage, especially during vulnerable moments

- Being thoughtful about what personal information you share

- Having a “safety plan” for crisis situations that involves human support

Frequently Asked Questions About AI Digital Confidants

What exactly is an AI digital confidant?

An AI digital confidant is an artificial intelligence system that people use for intimate, personal conversations—not just task completion. Unlike traditional AI assistants that focus on productivity, a digital confidant is used for emotional support, life advice, personal reflection, and navigating relationships. Examples include conversational AI like ChatGPT, Claude, and Microsoft Copilot when used for personal guidance rather than work tasks.

Is it safe to use AI for emotional support?

Using an AI digital confidant for emotional support can be beneficial when used appropriately, but carries risks. Research shows AI companions can reduce feelings of loneliness and provide comfort. However, experts recommend using AI as a supplement to human relationships, not a replacement. For serious mental health concerns, always consult with licensed professionals.

Are my conversations with AI private?

Not entirely. According to Stanford research, most major AI companies collect and may use your conversations for training future models. Privacy policies vary, but many retain data for extended periods and may share information with third parties. When using your AI confidant, be thoughtful about what personal information you share and check opt-out options where available.

Can AI replace human therapists or counselors?

No. While an AI digital confidant can provide comfort and help with self-reflection, it cannot replace professional mental health care. AI lacks the ability to provide diagnosis, cannot be held accountable for advice, and may sometimes give inaccurate or even harmful guidance. Psychologists recommend using AI as a complement to professional support, not a substitute.

What are the signs of unhealthy AI dependency?

Signs you may be over-relying on your digital confidant include: preferring AI conversations to human interaction, feeling distressed when AI is unavailable, neglecting real-world relationships, spending excessive time with AI companions, and believing AI understands you better than humans. If you notice these patterns, consider reducing usage and strengthening human connections.

How is AI companion technology regulated?

Regulation of AI digital confidant technology is still emerging. The EU’s AI Act prohibits certain emotion recognition AI in workplaces and schools. In the US, several states are proposing legislation requiring transparency from AI companions and referrals to human professionals when discussions indicate potential harm. Expect more regulations as the technology becomes more widespread.

Conclusion: Navigating the New Era of Human-AI Intimacy

Microsoft’s 2025 Copilot report has given us a clear window into something profound: the relationship between humans and AI is changing in ways that go far beyond productivity. The emergence of the AI digital confidant—trusted, intimate, always available—represents a turning point in how we relate to technology and, perhaps, to each other.

This isn’t inherently good or bad. Like all powerful tools, the digital confidant can be used well or poorly. It can provide comfort during lonely moments and help us work through our thoughts. But it can also foster dependency, create privacy risks, and substitute for the messy, difficult, irreplaceable work of human connection.

The challenge ahead isn’t technical—it’s deeply human. As individuals, we must learn to use our AI confidant relationships wisely, maintaining boundaries and prioritizing human connection. As a society, we need to develop norms, regulations, and ethical frameworks that ensure this powerful technology supports human flourishing rather than undermining it.

The future of AI won’t be judged solely by how intelligent these systems become. It will be judged by how they shape human connection—whether they bring us closer together or push us further apart.

What’s your relationship with your AI digital confidant? I’d love to hear your thoughts. Share your experiences in the comments below, and let’s navigate this brave new world together.

Sources: Microsoft “It’s About Time: The Copilot Usage Report 2025,” Stanford Institute for Human-Centered AI privacy research, Harvard Business School AI companion studies, Nature Machine Intelligence, Trends in Cognitive Sciences, MIT Media Lab research, American Psychological Association.

By:

Animesh Sourav Kullu is an international tech correspondent and AI market analyst known for transforming complex, fast-moving AI developments into clear, deeply researched, high-trust journalism. With a unique ability to merge technical insight, business strategy, and global market impact, he covers the stories shaping the future of AI in the United States, India, and beyond. His reporting blends narrative depth, expert analysis, and original data to help readers understand not just what is happening in AI — but why it matters and where the world is heading next.