AI Progress and Physics: Why Bigger Models Alone Won't Deliver the Next Breakthrough

Discover why AI progress and physics are deeply connected. Learn how physical limits—not just parameters—will shape the future of artificial intelligence.

Introduction — The Uncomfortable Truth About AI’s Future

Here’s a story the tech industry doesn’t love telling.

For the past decade, AI progress and physics seemed unrelated. The formula was simple: throw more data at bigger models, add computing power, and watch the magic happen. GPT-2 had 1.5 billion parameters. GPT-3 jumped to 175 billion. GPT-4? We don’t know exactly, but estimates suggest over a trillion.

But here’s the thing. I’ve been watching this space closely. And something is shifting.

The breathless announcements are getting quieter. The benchmark improvements are getting smaller. And researchers are starting to whisper about something uncomfortable: AI progress and physics are on a collision course.

Can artificial intelligence keep advancing if the laws of physics become the bottleneck? That’s the central question reshaping how we think about the future of AI. And honestly? The answer might surprise you.

The Scaling Era: How We Got Here

The Rise of Parameter-Driven AI

Let’s rewind. The relationship between AI progress and physics wasn’t always obvious because scaling seemed to work so effortlessly.

Remember when tens of millions of parameters felt ambitious? That was 2012, when AlexNet had roughly 60 million. By 2020, we hit hundreds of billions. Today, frontier models potentially contain trillions of parameters.

This wasn’t just growth. It was an explosion.

| Year | Model | Parameters |
|---|---|---|
| 2018 | GPT-1 | 117 million |
| 2019 | GPT-2 | 1.5 billion |
| 2020 | GPT-3 | 175 billion |
| 2023 | GPT-4 | ~1 trillion (estimated) |

The strategy seemed bulletproof. More parameters meant more capability. Simple.

Why Scaling Worked So Well

Understanding AI progress and physics requires understanding why scaling delivered such remarkable gains.

Larger models exhibited “emergent abilities”—capabilities that appeared suddenly at certain scales. They could reason. They could code. They could write poetry that occasionally made you feel something.

The gains were predictable enough that companies bet billions on this approach. If doubling compute improved performance by X percent, then you just… kept doubling.
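
The “keep doubling” logic can be sketched with a toy power-law curve, a shape often reported in scaling research. The function names and the constants `a`, `alpha`, and `floor` below are illustrative assumptions, not values fitted to any real model. The sketch only shows that, under such a curve, each doubling of compute buys a smaller absolute improvement than the one before.

```python
# Toy power-law scaling curve: loss = a * compute ** (-alpha) + floor.
# All constants are illustrative assumptions, not fitted to any real model.

def loss(compute: float, a: float = 10.0, alpha: float = 0.05, floor: float = 1.5) -> float:
    """Return the modeled loss for a compute budget in arbitrary units."""
    return a * compute ** (-alpha) + floor


def doubling_gains(start: float, doublings: int) -> list[float]:
    """Return the loss reduction obtained by each successive doubling of compute."""
    gains = []
    compute = start
    for _ in range(doublings):
        gains.append(loss(compute) - loss(2 * compute))
        compute *= 2
    return gains


if __name__ == "__main__":
    for step, gain in enumerate(doubling_gains(start=1.0, doublings=10), start=1):
        print(f"doubling {step:2d}: loss improves by {gain:.4f}")
```

Under these assumptions every doubling still helps, but each one helps a little less than the last while costing twice as much.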

Signs the Scaling Curve Is Flattening

But here’s where the link between AI progress and physics starts getting interesting.

The returns are diminishing. Training costs have skyrocketed—we’re talking hundreds of millions of dollars for a single model run. And the improvements? They’re getting harder to measure.

This isn’t just an economic problem. It’s a physics problem wearing an economic disguise.

The Physics Problem: Limits AI Can’t Ignore

Energy Consumption

When we discuss AI progress and physics, energy is where theory meets brutal reality.

Training GPT-4 reportedly consumed enough electricity to power thousands of homes for a year. The next generation will demand even more. Some estimates suggest AI could consume 3-4% of global electricity by 2030.

You can’t ignore thermodynamics. Every computation generates heat. Every calculation requires power. The connection between AI progress and physics isn’t abstract—it shows up on electricity bills.
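
To see how a training run turns into an electricity bill, here is a back-of-the-envelope sketch. Every input is a hypothetical placeholder (chip count, power draw, run length, data-center overhead, household consumption), not a reported figure for GPT-4 or any other model. The arithmetic is simply power times time, scaled by facility overhead.

```python
# Back-of-the-envelope training energy estimate.
# Every input below is a hypothetical placeholder, not a reported figure.
ACCELERATORS = 10_000         # assumed number of chips in the cluster
WATTS_PER_ACCELERATOR = 700   # assumed average draw per chip, in watts
TRAINING_DAYS = 90            # assumed length of the training run
PUE = 1.2                     # assumed facility overhead (cooling, power delivery)
HOME_KWH_PER_YEAR = 10_500    # assumed annual electricity use of one household


def training_energy_kwh(chips: int, watts: float, days: float, pue: float) -> float:
    """Total facility energy for a training run, in kilowatt-hours."""
    hours = days * 24
    return chips * watts * hours * pue / 1000


def household_years(energy_kwh: float, home_kwh_per_year: float) -> float:
    """How many households the same energy would supply for one year."""
    return energy_kwh / home_kwh_per_year


if __name__ == "__main__":
    energy = training_energy_kwh(ACCELERATORS, WATTS_PER_ACCELERATOR, TRAINING_DAYS, PUE)
    homes = household_years(energy, HOME_KWH_PER_YEAR)
    print(f"{energy / 1e6:.1f} GWh, about {homes:,.0f} household-years")
```

With these placeholder inputs the result is roughly 18 GWh, or about 1,700 household-years. Change any assumption and the total moves in direct proportion, which is why larger clusters and longer runs translate so directly into energy demand.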

Heat Dissipation & Chip Limits

Here’s something most people don’t realize about AI progress and physics: chips have physical limits.

Moore’s Law—the observation that transistor density doubles roughly every two years—is slowing dramatically. We’re approaching atomic scales where quantum effects create unpredictable behavior. Heat dissipation becomes nearly impossible at certain densities.

Modern AI chips already run hot enough to fry eggs. That’s not a joke. Thermal management has become a critical engineering challenge.

Speed of Light & Data Movement

Perhaps the most fundamental constraint linking AI progress and physics is one we learned in high school: nothing travels faster than light.

Inside data centers, information must move between processors, memory, and storage. Those distances create latency. That latency creates bottlenecks. And no amount of money can make a signal travel faster than light.

The physics of data movement increasingly determines what’s computationally possible.
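
The latency floor is easy to put numbers on. The sketch below computes the minimum one-way signal delay over a distance and converts it into processor clock cycles. The function names, the example distances, the 2 GHz clock, and the velocity factor of 0.67 (a rough assumption for signals in optical fibre) are all illustrative choices; only the speed of light itself is a fixed constant.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def one_way_delay_ns(distance_m: float, velocity_factor: float = 1.0) -> float:
    """Minimum one-way signal delay in nanoseconds.

    velocity_factor is the fraction of the speed of light at which the
    signal propagates (1.0 for vacuum; real cables and fibre are slower).
    """
    return distance_m / (SPEED_OF_LIGHT_M_PER_S * velocity_factor) * 1e9


def cycles_lost(distance_m: float, clock_hz: float, velocity_factor: float = 1.0) -> float:
    """Clock cycles that elapse while the signal is in flight."""
    return one_way_delay_ns(distance_m, velocity_factor) * 1e-9 * clock_hz


if __name__ == "__main__":
    # Hypothetical distances: across a board, across a rack row, across a hall.
    for metres in (0.3, 10.0, 100.0):
        delay = one_way_delay_ns(metres, velocity_factor=0.67)
        cycles = cycles_lost(metres, clock_hz=2e9, velocity_factor=0.67)
        print(f"{metres:6.1f} m: {delay:8.2f} ns, {cycles:8.1f} cycles at 2 GHz")
```

Even in a vacuum, light covers only about 30 centimetres per nanosecond, so a processor running at gigahertz speeds waits many cycles for data that sits a few metres away.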

Why Trillions of Parameters May Not Be Enough

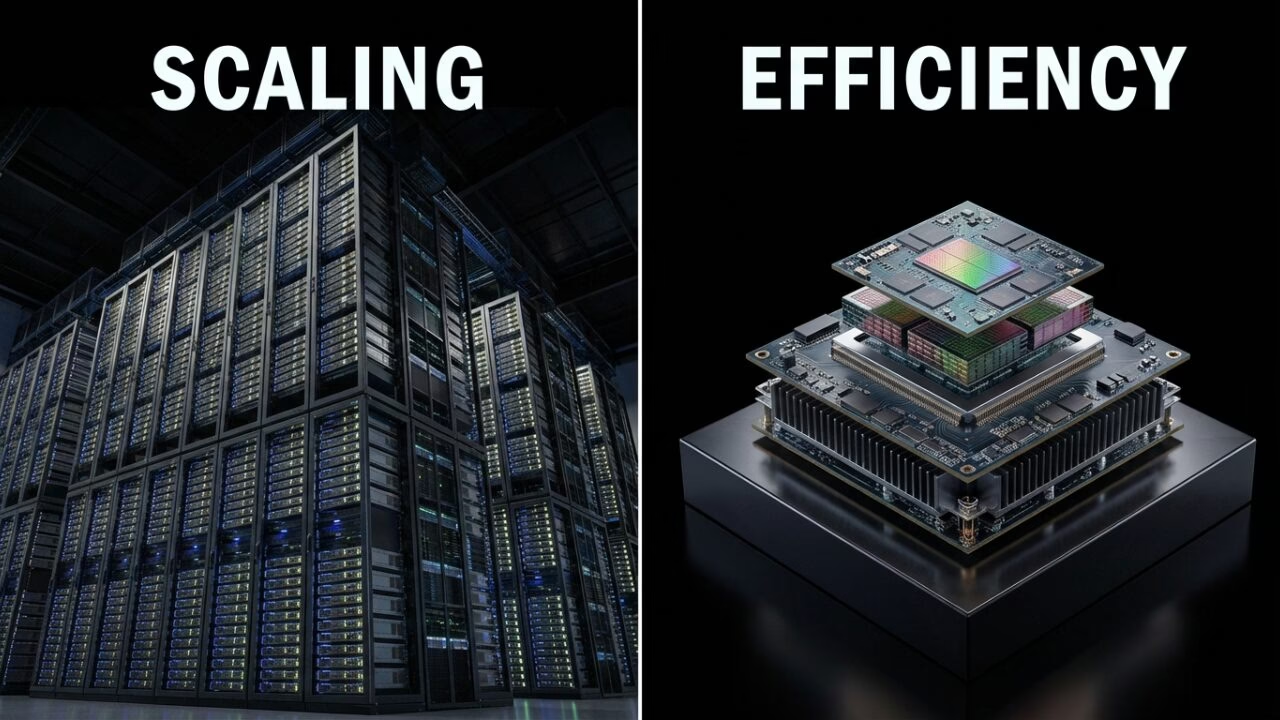

Compute Scaling vs Real-World Constraints

The relationship between AI progress and physics becomes clearer when you examine hardware limitations.

GPUs and TPUs—the workhorses of AI training—face fabrication constraints. The most advanced chips require factories costing $20 billion or more. Only a handful of companies worldwide can produce them.

You can’t scale what you can’t manufacture.

Economic Limits of Scaling

Let’s talk money. Because the tension between AI progress and physics isn’t just about electrons. It’s about economics too.

Training frontier models now costs somewhere between $100 million and $1 billion. The next generation might cost $10 billion or more. Only a handful of organizations can afford this.

| Cost Factor | Current Frontier | Projected Next-Gen |
|---|---|---|
| Training Cost | $100M-$500M | $1B-$10B |
| Hardware | $1B+ | $5B+ |
| Energy | $10M+ | $50M+ |

This isn’t sustainable. And sustainability matters for AI progress as much as raw capability.

The Risk of Centralized AI Progress

When only a handful of companies can afford frontier AI development, innovation suffers. Diverse approaches get abandoned. Alternative paths remain unexplored.

The economics and physical constraints of AI progress mean we might be missing breakthroughs because they don’t fit the “scale everything” paradigm.

What Comes Next: AI Beyond Brute Force Scaling

Algorithmic Efficiency

Here’s where the story of AI progress and physics points toward something hopeful.

Researchers are developing smaller, smarter models that achieve comparable results with a fraction of the compute. Techniques include:

- Pruning: Removing unnecessary neural connections

- Distillation: Training small models to mimic larger ones

- Mixture of Experts: Activating only relevant model parts

These approaches respect physical constraints rather than fighting them.
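
As a concrete illustration of the first technique in the list, here is a minimal sketch of magnitude pruning on a flat list of weights. It assumes the simplest possible rule (zero out the weights with the smallest absolute value) and a hypothetical helper name, `magnitude_prune`. Real pruning pipelines usually work on whole tensors and retrain afterward, which this sketch leaves out.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    layer = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02]  # made-up example weights
    print(magnitude_prune(layer, sparsity=0.5))
    # prints [0.9, 0.0, 0.4, 0.0, -0.7, 0.0]
```

Half the weights are gone, and the ones that remain are the ones that were doing most of the work. Zeroed weights can be skipped or stored compactly on hardware and software that support sparsity, which is where the compute and energy savings come from.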

Physics-Aware AI Architectures

The future of AI progress and physics might involve architectures designed around physical principles rather than in spite of them.

Neuromorphic computing mimics biological brains, which accomplish remarkable feats using roughly 20 watts of power. A laptop under heavy load can draw more power than your brain.

Photonic computing uses light instead of electrons, potentially enabling faster, cooler computation.

Analog computing trades digital precision for energy efficiency in applications where approximation works.

Hybrid Systems

Another frontier in AI progress and physics involves combining approaches:

- Symbolic reasoning paired with neural networks

- Specialized models for specific domains

- Cascading systems that route queries efficiently

These hybrid approaches acknowledge that no single architecture solves everything.
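
The cascading idea from the list above can be sketched in a few lines: try the cheapest model first and escalate only when it is not confident. The `cascade` function, the confidence threshold, and the two stand-in models are hypothetical, written only to show the routing logic. A real system would need a calibrated confidence signal, which is the hard part.

```python
from typing import Callable, List, Tuple

# A model takes a query and returns (answer, confidence between 0 and 1).
Model = Callable[[str], Tuple[str, float]]


def cascade(query: str, models: List[Model], threshold: float = 0.8) -> Tuple[str, int]:
    """Try models from cheapest to most expensive; stop at the first confident answer.

    Returns the answer and the index of the model that produced it.
    """
    if not models:
        raise ValueError("need at least one model")
    answer = ""
    for index, model in enumerate(models):
        answer, confidence = model(query)
        if confidence >= threshold:
            return answer, index
    # No model was confident: fall back to the last (largest) model's answer.
    return answer, len(models) - 1


# Hypothetical stand-ins for real models, for illustration only.
def small_model(query: str) -> Tuple[str, float]:
    # Pretend the small model is confident only on short queries.
    confidence = 0.9 if len(query.split()) <= 5 else 0.3
    return "small-answer", confidence


def large_model(query: str) -> Tuple[str, float]:
    return "large-answer", 0.95


if __name__ == "__main__":
    print(cascade("What is 2 + 2?", [small_model, large_model]))
    print(cascade("Summarize the trade-offs between pruning and distillation", [small_model, large_model]))
```

If most queries are easy, most of them never touch the large model, so the average energy per query drops without changing the best-case quality.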

The Role of Hardware in Future AI Progress

New Chip Designs

Hardware innovation is critical to sustaining AI progress within physical limits.

Companies are designing:

- Energy-efficient accelerators optimized for specific AI workloads

- Domain-specific chips that excel at particular tasks

- 3D chip architectures that reduce data movement distances

These innovations respect physical constraints rather than ignoring them.

Quantum Computing (Long-Term)

Let’s be honest about quantum’s role in AI progress and physics: it’s complicated.

Quantum computers excel at specific problem types. They’re not general-purpose replacements for classical computing. For certain AI applications—optimization, sampling, simulation—they might eventually help.

But expectations should remain cautious. We’re years, possibly decades, from practical quantum AI applications.

Edge AI & Distributed Intelligence

A fascinating development in AI progress and physics involves moving computation closer to data sources.

Edge AI runs models on devices—phones, cars, sensors—rather than centralized data centers. This reduces:

- Network latency

- Energy spent moving data across networks

- Centralized infrastructure pressure

Distributed intelligence might sidestep some physical constraints by keeping computation close to where the data is produced.

Industry Implications

Big Tech’s Strategic Shift

Major technology companies are recognizing that the physical constraints on AI progress require strategic adaptation.

The focus is shifting from “build bigger” to “build smarter.” Efficiency metrics are becoming competitive advantages. Sustainability claims are appearing in marketing materials.

This isn’t altruism—it’s physics forcing economic adaptation.

Startups and Open Research

The physical constraints that limit AI progress through scale create opportunities for smaller players.

Startups pursuing efficiency-focused approaches can compete where they couldn’t before. Open research communities can contribute innovations that don’t require billion-dollar budgets.

Ironically, the physics bottleneck might actually democratize AI progress.

Governments and Policy

The intersection of AI progress and physics has policy implications that governments are beginning to recognize:

- Energy infrastructure requirements for AI development

- Semiconductor manufacturing as a strategic priority

- Environmental regulations affecting data center operations

- Research funding for alternative approaches

The physics of AI is becoming a matter of national strategy.

Editorial Insight: What This Really Means

AI’s Next Leap Will Be Engineering, Not Just Data

Here’s my take on AI progress and physics: the next breakthrough won’t come from adding zeros to parameter counts.

It’ll come from clever engineering. From respecting constraints. From building systems that accomplish more with less.

The researchers who understand physics will outcompete those who only understand scaling.

Physics Forces AI to Become Smarter, Not Just Bigger

There’s something almost poetic about AI progress and physics colliding.

Constraints drive creativity. Limitations inspire innovation. When you can’t simply throw more compute at problems, you must actually solve them.

The physics bottleneck might be the best thing to happen to AI development.

The End of “Scale at Any Cost”

The era of ignoring the physics behind AI progress is ending.

Sustainable AI is becoming a competitive advantage, not a marketing afterthought. Companies that optimize for efficiency will outcompete those burning money on diminishing returns.

The market is starting to reward physics-aware approaches.

What This Means for the AI Timeline

Understanding the physical constraints on AI progress changes timeline expectations.

Expect:

- Fewer headline-grabbing announcements

- More meaningful, practical improvements

- A shift from hype cycles to engineering cycles

- Incremental advances that compound over time

Don’t Expect:

- Exponential capability gains continuing indefinitely

- AGI arriving simply through scale

- Physics constraints magically disappearing

The timeline isn’t about when we hit some magic parameter count. It’s about when we solve the underlying engineering challenges that the collision of AI progress and physics presents.

Conclusion — The Big Picture

Let me leave you with this.

AI progress and physics are more connected than most people realize. For years, we pretended otherwise. We scaled models, burned energy, and celebrated benchmarks.

But physics doesn’t negotiate. It doesn’t care about investor presentations or press releases.

The laws of thermodynamics apply to neural networks the same way they apply to steam engines. Energy constraints are real. Heat dissipation matters. The speed of light isn’t increasing anytime soon.

Here’s what I believe about AI progress and physics going forward:

The future won’t belong to whoever builds the biggest model. It’ll belong to whoever builds the smartest systems within physical constraints. To engineers who respect limitations. To researchers who find elegant solutions rather than brute-force approaches.

The next AI revolution won’t come from simply adding parameters. It’ll come from efficiency, physics-aware design, and genuine creativity.

And honestly? That’s more exciting than another announcement about trillions of parameters.

The relationship between AI progress and physics isn’t a limitation story. It’s an innovation story waiting to unfold.

What do you think? Are we entering a new phase of AI development? Share your thoughts below. And if you found this analysis valuable, share it with someone who’s curious about where AI is actually heading—beyond the hype.

Stay curious. Stay skeptical. And remember: the universe doesn’t care about our scaling curves.

By:

Animesh Sourav Kullu is an international tech correspondent and AI market analyst known for transforming complex, fast-moving AI developments into clear, deeply researched, high-trust journalism. With a unique ability to merge technical insight, business strategy, and global market impact, he covers the stories shaping the future of AI in the United States, India, and beyond. His reporting blends narrative depth, expert analysis, and original data to help readers understand not just what is happening in AI — but why it matters and where the world is heading next.