Nvidia Groq Chips Deal Signals a Major Shift in the AI Compute Power Balance

Introduction: Why the Nvidia Groq Chips Deal Changes Everything

Something big just happened in the world of AI hardware. And honestly? It caught a lot of industry watchers off guard. The Nvidia Groq chips deal—first reported by The New York Times—represents one of the most significant partnerships we’ve seen in the artificial intelligence infrastructure space this year. This isn’t just another business announcement. This is a signal.

For years, Nvidia has dominated the AI chip market with an iron grip. Their GPUs power everything from ChatGPT to autonomous vehicles. So when news broke about this partnership, people started asking questions. Why would the undisputed king of AI hardware partner with a startup known for building chips that work differently than GPUs? What does Nvidia know that we don’t?

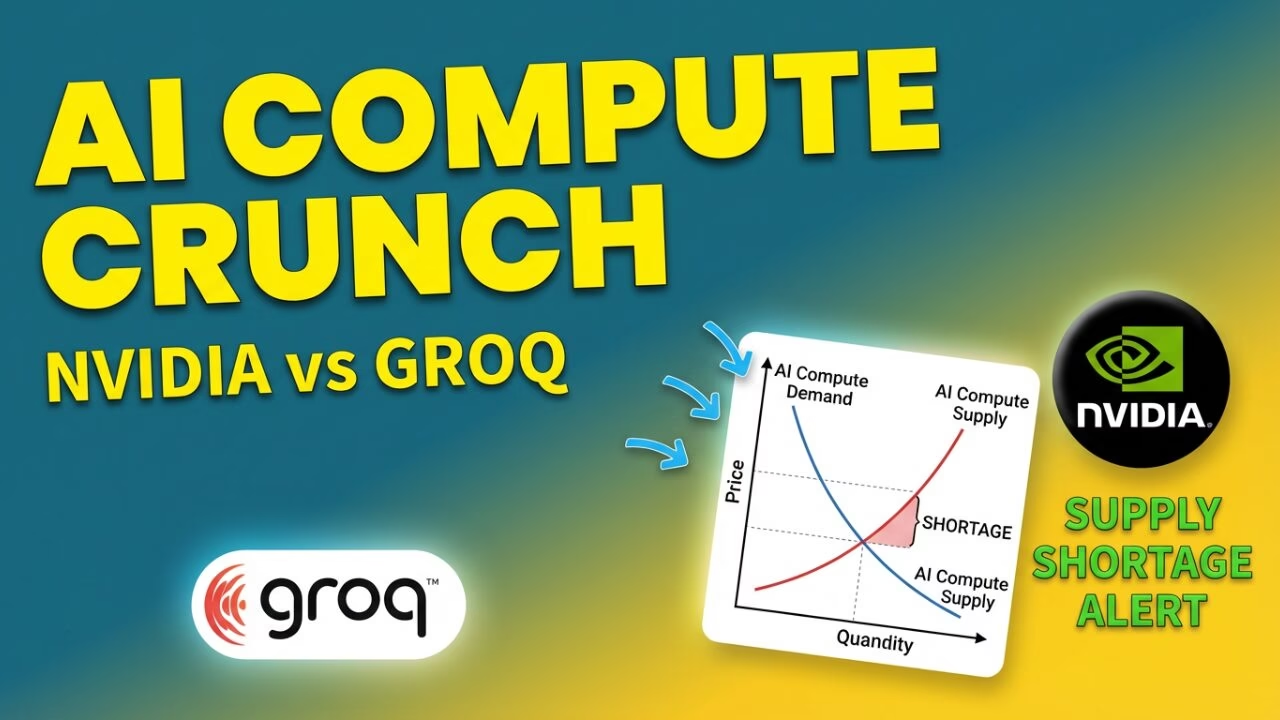

Here’s the thing. The Nvidia Groq chips deal isn’t a sign of weakness. It’s actually the opposite. AI demand has grown so explosively that even Nvidia, which designs its chips but relies on foundry partners to build them, can’t keep up alone. This partnership reflects a maturing market where compute supply has become the critical bottleneck, not model development.

In this comprehensive analysis, I’m going to break down exactly what the Nvidia Groq chips deal involves, why it matters for the future of AI, and what it means for everyone from cloud providers to enterprise customers. The Nvidia Groq chips deal might just be the first domino in a complete reshaping of AI infrastructure. Let’s dive in.

What the Nvidia Groq Chips Deal Actually Involves

The Agreement: What We Know So Far

Let’s cut through the noise and look at what this deal actually entails. According to reporting, Nvidia has entered into an arrangement to use chips designed by Groq for specific AI workloads. The deal appears focused on inference tasks, meaning running trained AI models rather than training them from scratch.

The exact scope hasn’t been fully disclosed. However, industry analysts believe it involves significant volume commitments and possibly integration into Nvidia’s broader AI platform offerings. The timeline suggests deployment could begin within the next 12-18 months, positioning both companies for the anticipated AI infrastructure surge of 2026.

What makes the Nvidia Groq chips deal particularly interesting is the nature of the workloads involved. Rather than competing with Nvidia’s H100 and Blackwell GPUs for training, this arrangement focuses on complementary use cases where Groq’s architecture excels.

What Makes Groq’s Chips Different from Nvidia GPUs?

To understand why this partnership makes strategic sense, you need to understand what makes Groq special. Groq builds something called a Language Processing Unit (LPU)—and it works fundamentally differently than a GPU. The Nvidia Groq chips deal essentially acknowledges that different AI tasks need different hardware approaches.

Key Differences Between Groq LPUs and Nvidia GPUs:

- Architecture: Groq uses a deterministic, software-defined design in which the compiler schedules execution ahead of time, versus the dynamically scheduled, massively parallel design of Nvidia’s GPUs

- Speed: Groq chips deliver exceptionally fast inference with predictable latency

- Use Case: Optimized for running AI models, not training them

- Efficiency: Superior performance-per-watt for specific workloads

- Predictability: Consistent, deterministic performance rather than variable throughput

This partnership recognizes that the AI compute landscape is fragmenting. One size no longer fits all. The arrangement positions both companies to serve a market that increasingly demands specialized solutions.
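To make the "predictable latency" point concrete, here is a minimal sketch of how you might compare two latency profiles. The sample values are invented for illustration and are not measurements of any Nvidia or Groq hardware; `latency_summary` is a hypothetical helper name.

```python
import statistics


def latency_summary(samples_ms):
    """Summarize latency samples: mean, median, p99, and the p99/median ratio."""
    ordered = sorted(samples_ms)
    median = statistics.median(ordered)
    # Nearest-rank 99th percentile, computed with integer math: ceil(0.99 * n).
    rank = (99 * len(ordered) + 99) // 100
    p99 = ordered[rank - 1]
    return {
        "mean_ms": statistics.mean(ordered),
        "median_ms": median,
        "p99_ms": p99,
        "tail_ratio": p99 / median,
    }


# ASSUMPTION: made-up samples, chosen only to show the shape of the comparison.
deterministic = [10.0] * 100           # every request takes the same time
variable = [8.0] * 95 + [40.0] * 5     # faster on average, with a slow tail

for label, samples in (("deterministic", deterministic), ("variable", variable)):
    print(label, latency_summary(samples))
```

In this toy data the variable profile has the lower mean, yet its 99th-percentile request is five times slower than its median. That is the kind of gap a latency-sensitive application would notice, and it is why tail latency, not just the average, belongs in any comparison.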

Why Would Nvidia Partner with Groq? Strategic Analysis

AI Demand Is Outpacing GPU Supply

The simplest explanation for this partnership? Nvidia simply cannot supply enough chips. Despite ramping production aggressively, demand for AI compute continues to outstrip supply. Hyperscalers like Microsoft, Google, and Amazon are competing fiercely for every available GPU. Enterprise customers can face wait times measured in months.

The Nvidia Groq chips deal allows Nvidia to offer customers more options without admitting defeat. By incorporating Groq’s inference-optimized chips into its ecosystem, Nvidia can address workloads that don’t necessarily need GPU-class training capabilities. This arrangement is about expanding the pie, not slicing it differently.

Diversifying AI Compute Without Losing Control

Here’s where the arrangement gets really clever. Nvidia isn’t just a hardware company anymore. It has become the platform orchestrator for AI computing. CUDA, its software platform, underpins a large share of the world’s AI workloads. If the deal works the way it appears to, third-party chips could plug into this ecosystem rather than compete against it.

Think about it. If you’re Groq, would you rather spend billions fighting Nvidia head-on? Or would you accept a partnership where your chips fill a specific niche within Nvidia’s dominant platform? The Nvidia Groq chips deal represents a win-win scenario where both companies strengthen their positions.

Strategic Optionality and Hedging Risk

This partnership also provides Nvidia with strategic insurance. Supply chain disruptions, geopolitical tensions, and manufacturing constraints could all impact Nvidia’s ability to deliver. The arrangement creates redundancy—if GPU supply tightens further, Groq’s chips can absorb some inference demand.

Additionally, the Nvidia Groq chips deal keeps a potential competitor inside Nvidia’s tent. Better to have Groq as a partner than as an enemy rallying other chip startups against the GPU giant. This partnership neutralizes a threat while capturing its value.

What the Nvidia Groq Chips Deal Means for the AI Chip Market

The Rise of Specialized AI Accelerators

The Nvidia Groq chips deal signals a fundamental shift in how the industry thinks about AI hardware. For years, the assumption was that GPUs would handle everything. Training? GPU. Inference? GPU. Edge AI? Smaller GPU. This partnership challenges that orthodoxy.

We’re entering an era of specialized accelerators. Training workloads have different requirements than inference. Real-time applications need different chips than batch processing. This deal acknowledges that a heterogeneous approach—multiple chip types working together—will define AI infrastructure going forward.

Pressure on Traditional GPU Dominance

While the Nvidia Groq chips deal strengthens Nvidia’s ecosystem position, it also validates the specialized accelerator market. AMD, Intel, and dozens of startups are watching closely. If Nvidia itself is diversifying beyond pure GPU solutions, that’s a powerful signal that the market is ready for alternatives.

This partnership could accelerate investment in companies building TPUs, NPUs, and other AI-specific processors. Performance-per-watt metrics are becoming as important as raw FLOPS. The deal validates this shift in industry priorities.

AI Chip Architecture Comparison

| Feature | Nvidia GPU | Groq LPU | Combined Value |
|---|---|---|---|
| Primary Use | Training + Inference | Inference Optimized | Full AI Pipeline |
| Latency | Variable | Ultra-Low, Consistent | Optimized per Task |
| Power Efficiency | High (improving) | Very High | Right-Sized Compute |
| Ecosystem | CUDA Dominant | Growing Integration | Unified Platform |
| Availability | Constrained | Scaling Up | Expanded Supply |

Opportunity for AI Chip Startups

Perhaps the most exciting implication is what this means for the broader AI chip startup ecosystem. The Nvidia Groq chips deal proves that partnership—not just competition—is a viable path to market. Companies like Cerebras, SambaNova, and Tenstorrent are surely taking notes.

This deal creates a template. Find a niche where you excel. Prove your value proposition. Position yourself as complementary rather than competitive. Then negotiate integration into the dominant ecosystem. The Nvidia Groq chips deal could spawn a dozen similar partnerships.

How Groq Benefits from the Nvidia Partnership

Validation at the Highest Level

For Groq, this partnership is nothing short of transformative. When the world’s most valuable chip company validates your technology through partnership, that credibility is priceless. The Nvidia Groq chips deal instantly elevates Groq from promising startup to proven enterprise player.

Investors, customers, and partners all take notice. This deal will likely trigger increased funding interest, easier sales conversations, and faster talent acquisition. Being chosen by Nvidia—when they could work with anyone—sends an unmistakable signal about Groq’s technology.

Faster Path to Enterprise Adoption

Enterprise sales cycles for AI infrastructure can stretch 18-24 months. Companies need to evaluate technology, run benchmarks, negotiate contracts, and navigate procurement. This partnership dramatically shortens Groq’s journey.

Through the deal, Groq gains access to Nvidia’s existing customer relationships. Enterprises already buying Nvidia solutions can now evaluate Groq with reduced friction. In effect, Groq gets a co-selling partner with unmatched reach.

Positioning as the Inference Specialist

The Nvidia Groq chips deal allows Groq to own a specific territory: AI inference. Rather than trying to be everything to everyone, Groq can focus on being the best at running trained models. In effect, the partnership gives Groq permission to specialize.

This positioning has enormous value. As AI models get larger and inference accounts for a growing share of total cost of ownership, demand for inference-optimized hardware should grow with it, and Groq is well placed to capture that segment.

Implications for Cloud Providers and Enterprise Customers

More Choice in AI Infrastructure

If you’re running AI workloads in the cloud or on-premise, the Nvidia Groq chips deal is good news. More hardware options mean more competition, better pricing, and solutions tailored to specific needs. It loosens what has been a near-monopoly on high-performance AI compute.

Cloud providers like AWS, Azure, and Google Cloud are likely evaluating the implications already. We could soon see Groq instances alongside Nvidia GPU instances in their catalogs, which would expand the menu for AI infrastructure buyers.

Potential Cost and Performance Gains

The Nvidia Groq chips deal could translate directly into cost savings for enterprises. For inference-heavy workloads, which describe most production AI applications, using right-sized Groq hardware instead of general-purpose GPUs can make economic sense. The potential payoff is lower latency, more predictable throughput, and better efficiency.

Companies running chatbots, recommendation engines, or real-time AI features should pay close attention. These workloads might be well suited to Groq’s architecture, and the result could be doing more AI with less spend.

Complexity of Multi-Chip AI Stacks

There’s a catch, though. The deal introduces complexity. Managing heterogeneous hardware, with GPUs for training and Groq chips for inference, requires sophisticated orchestration. Software stacks must be optimized for multiple architectures. The partnership creates opportunities but also challenges.

This complexity actually strengthens Nvidia’s platform position. Its software orchestration capabilities become even more valuable in a multi-chip world. Paradoxically, the deal might make enterprises more dependent on Nvidia’s software even as they diversify hardware.
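To give a feel for what "orchestration" means at its simplest, here is a toy routing sketch. Everything in it is an assumption for illustration: the pool names, the `Job` fields, and the routing rule are hypothetical and do not describe any real Nvidia or Groq software.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    GPU_POOL = "gpu_pool"   # hypothetical pool of general-purpose GPUs
    LPU_POOL = "lpu_pool"   # hypothetical pool of inference-optimized chips


@dataclass(frozen=True)
class Job:
    name: str
    kind: str                      # "training" or "inference"
    latency_sensitive: bool = False


def route(job: Job) -> Backend:
    """Pick a hardware pool for a job using a deliberately simple rule."""
    if job.kind == "training":
        return Backend.GPU_POOL
    if job.kind == "inference":
        # ASSUMPTION: real-time inference goes to the low-latency pool,
        # while batch inference stays on GPUs.
        return Backend.LPU_POOL if job.latency_sensitive else Backend.GPU_POOL
    raise ValueError(f"unknown job kind: {job.kind!r}")


jobs = [
    Job("fine-tune-model", "training"),
    Job("chatbot-replies", "inference", latency_sensitive=True),
    Job("nightly-batch-scoring", "inference"),
]
for job in jobs:
    print(f"{job.name} -> {route(job).value}")
```

A production scheduler would also have to weigh capacity, model compatibility, data locality, and cost, which is exactly where the added complexity comes from.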

Editorial Analysis: What the Nvidia Groq Chips Deal Really Means

AI Compute Is Entering a Modular Era

Here’s my take on the Nvidia Groq chips deal. We’re witnessing the beginning of modular AI computing. Just as data centers evolved from monolithic mainframes to diverse server architectures, AI infrastructure is fragmenting into specialized components, and this partnership is an early indicator of that trend.

The future isn’t one chip to rule them all. It’s the right chip for each task: training on GPUs, inference on LPUs, edge processing on NPUs, each workload matched to the hardware that suits it. This deal is the opening chapter of that modular story.

Nvidia Is Acting Like an AI Operating System

The Nvidia Groq chips deal reveals Nvidia’s true ambition. They don’t just want to sell chips. They want to be the operating system for AI computing. Just as Microsoft Windows dominated PCs while supporting diverse hardware vendors, Nvidia aims to control the AI software layer while accommodating various chips.

Through deals like this one, Nvidia strengthens its software platform. CUDA compatibility, NVLink connectivity, and orchestration tools become more valuable, not less, as hardware diversifies. The partnership reinforces Nvidia’s moat rather than threatening it.

The Real Competition Is Throughput, Not FLOPS

The Nvidia Groq chips deal signals a shift in how we measure AI hardware performance. Raw FLOPS—floating point operations per second—has been the traditional benchmark. But what actually matters? Useful work completed per dollar and per watt. End-to-end throughput, not theoretical peaks.

Groq’s chips might have lower raw FLOPS than top Nvidia GPUs. But for inference workloads, Groq’s pitch is faster results with less energy. The deal suggests that peak-number benchmark wars matter less than they used to. Practical performance wins, and this partnership is fundamentally about pragmatism.
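Here is a small sketch of what "useful work per dollar and per watt" looks like as arithmetic. The two chips and all of their numbers are invented placeholders, not specifications or prices for any real Nvidia or Groq product; the class and method names are hypothetical too.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    peak_tflops: float         # theoretical peak, the classic headline number
    tokens_per_second: float   # measured inference throughput on a given model
    power_watts: float
    cost_per_hour_usd: float

    def tokens_per_joule(self) -> float:
        # One watt is one joule per second, so this is useful work per unit of energy.
        return self.tokens_per_second / self.power_watts

    def usd_per_million_tokens(self) -> float:
        tokens_per_hour = self.tokens_per_second * 3600
        return self.cost_per_hour_usd / tokens_per_hour * 1_000_000


# ASSUMPTION: all figures below are made up purely to illustrate the metrics.
chip_a = Accelerator("chip_a", peak_tflops=1000, tokens_per_second=2000,
                     power_watts=700, cost_per_hour_usd=4.0)
chip_b = Accelerator("chip_b", peak_tflops=200, tokens_per_second=3000,
                     power_watts=300, cost_per_hour_usd=3.0)

for chip in (chip_a, chip_b):
    print(f"{chip.name}: {chip.peak_tflops:.0f} peak TFLOPS, "
          f"{chip.tokens_per_joule():.2f} tokens/J, "
          f"${chip.usd_per_million_tokens():.3f} per 1M tokens")
```

With these placeholder figures, the chip with one fifth of the peak TFLOPS still comes out ahead on both tokens per joule and cost per million tokens. Whether that holds for any real pair of chips depends entirely on measured throughput for the model you actually run.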

Risks and Open Questions About the Nvidia Groq Chips Deal

No analysis is complete without considering what could go wrong. The Nvidia Groq chips deal raises several important questions that remain unanswered.

Key Questions Surrounding the Deal:

- Pricing Power: Will third-party chips dilute Nvidia’s legendary profit margins? The deal could set precedents that affect Nvidia’s pricing strategy across all products.

- Manufacturing Scale: Can Groq ramp production fast enough? The partnership only matters if Groq can deliver chips in volume, and scaling supply remains its biggest challenge.

- Ecosystem Openness: How open will Nvidia’s platform really be? The deal suggests openness, but Nvidia could still favor its own silicon when it matters most.

- Competitive Response: How will AMD, Intel, and other competitors react? The result might be a wave of similar partnerships or more aggressive competition.

- Customer Adoption: Will enterprises actually embrace multi-chip stacks? The deal only succeeds if customers see value in the added complexity.

These risks don’t invalidate the strategic logic of the Nvidia Groq chips deal. But they remind us that execution matters as much as vision. The Nvidia Groq chips deal is a promising beginning, not a guaranteed success.

What Comes Next: 2026 AI Hardware Outlook

Based on the Nvidia Groq chips deal and broader market trends, here’s what I expect to see over the next 12-18 months.

Predictions for 2026:

- More Hybrid Hardware Deals: The Nvidia Groq chips deal will inspire similar partnerships. Expect AMD, Intel, or major cloud providers to announce deals with AI chip startups.

- Inference Optimization Focus: As models mature, inference takes up a growing share of costs. Expect more emphasis on running AI efficiently, not just training it.

- Multi-Accelerator Architectures: Cloud providers will offer mix-and-match AI infrastructure, with several chip types available side by side.

- Specialization Over Scale: If the deal delivers, it will show that specialized chips can win. Competition shifts from raw performance to workload-specific optimization.

- Software Orchestration Becomes Critical: Managing diverse chips requires sophisticated software. Nvidia’s platform advantage becomes even more important.

The Nvidia Groq chips deal isn’t just a single transaction. It’s a signpost pointing toward where the entire industry is heading. Those who understand its implications will be better positioned for the next phase of AI infrastructure evolution.

Conclusion: The Big Picture on the Nvidia Groq Chips Deal

Let’s step back and see the forest, not just the trees. The Nvidia Groq chips deal reflects a maturing AI infrastructure market. Demand still exceeds supply, but the story is no longer only about shortage. The industry is getting smarter about matching workloads to optimal hardware.

The deal shows that AI growth is no longer constrained by models alone. It is also constrained by compute architecture choices. Having the best model means nothing if you can’t run it efficiently at scale, and that is the bottleneck this partnership addresses.

Nvidia’s willingness to integrate alternative chips demonstrates confidence, not weakness. Only a market leader secure in its position would embrace potential competitors. Nvidia is playing from a position of strength, expanding its ecosystem rather than defending a shrinking castle.

The future of AI hardware will be heterogeneous, specialized, and strategically orchestrated. The Nvidia Groq chips deal is the clearest indication yet that this future has arrived. Whether you’re building AI applications, investing in tech companies, or simply trying to understand where the industry is heading, it deserves your attention.

One deal. One partnership. One signal that everything about AI compute is about to change. That’s the Nvidia Groq chips deal.

By:

Animesh Sourav Kullu is an international tech correspondent and AI market analyst known for transforming complex, fast-moving AI developments into clear, deeply researched, high-trust journalism. With a unique ability to merge technical insight, business strategy, and global market impact, he covers the stories shaping the future of AI in the United States, India, and beyond. His reporting blends narrative depth, expert analysis, and original data to help readers understand not just what is happening in AI — but why it matters and where the world is heading next.