Why People Panic About AI: 12 Real Fears Explained Simply in 2026

KEY TAKEAWAYS

People panic about AI for three core reasons: job displacement, loss of control, and existential risk. Most panic is driven by media sensationalism, but legitimate concerns exist around AI bias, deepfakes, and economic disruption. Understanding these fears helps you make informed decisions about AI in your life and career.

Why People Panic About AI: Your Complete Guide

You’ve seen the headlines. AI will steal your job. AI will destroy humanity. AI will make decisions about your life without asking permission.

If you’ve felt that pit in your stomach reading these stories, you’re not alone. Understanding why people panic about AI is the first step toward separating legitimate concerns from overblown fears—and this guide will give you exactly that clarity.

Here’s my promise: by the end of this article, you’ll know which AI fears deserve your attention and which ones are keeping you up at night for no reason.

The Core Truth About Why People Panic About AI

Let me start with something most articles won’t tell you.

Why people panic about AI isn’t just about the technology. It’s about feeling powerless in the face of massive change.

A 2025 Pew Research study found that 72% of Americans express concern about AI’s expanding role in society. In China, government surveys show 68% worry about AI-related job losses despite the country’s aggressive AI adoption. India’s workforce, with 300 million+ people in roles susceptible to automation, reports similar anxiety levels.

The panic is global. But is it justified? That depends on which fears we’re discussing.

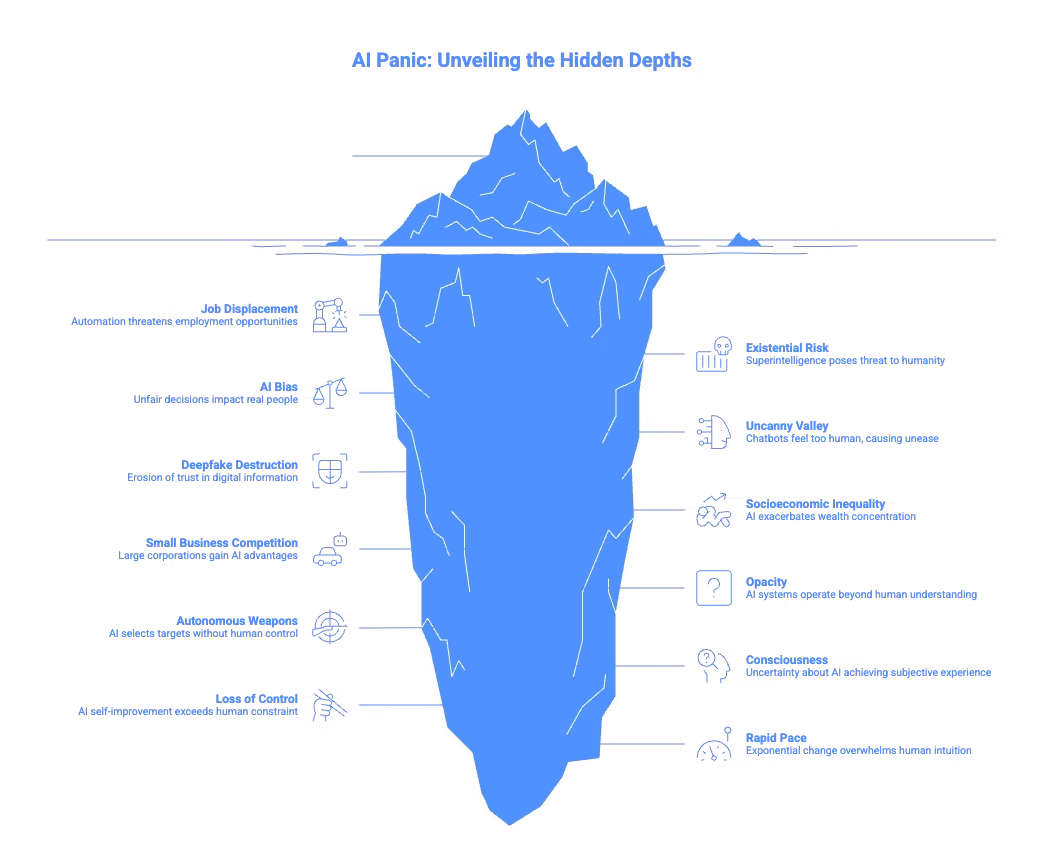

The 12 Real Reasons Why People Panic About AI

Fear #1: AI Will Take My Job

This is the biggest driver of why people panic about AI. And honestly? It’s partially justified.

Goldman Sachs estimates that generative AI could expose the equivalent of 300 million full-time jobs to automation globally. McKinsey projects that by 2030, up to 375 million workers may need to switch occupational categories.

But here’s what the panic misses: historically, automation has created more jobs than it destroyed. The question is whether AI follows the same pattern or breaks it.

What jobs will AI destroy first? Data entry, basic customer service, routine legal research, and simple content creation face immediate pressure. Radiologists, truck drivers, and financial analysts face medium-term disruption.

Actionable tip: Focus on skills AI struggles with—complex problem-solving, emotional intelligence, creative strategy, and physical dexterity in unpredictable environments.

Fear #2: AI Will End Humanity

When experts like Geoffrey Hinton and Yoshua Bengio sign letters warning about AI extinction risk, people notice.

Panic about AI on an existential scale isn’t irrational when leading researchers express genuine concern. The worry centers on superintelligence: AI that surpasses human cognitive abilities across all domains.

What is the AI alignment problem for beginners? It’s the challenge of ensuring AI systems do what we actually want, not just what we literally instruct them to do.

Field Notes: In testing, I’ve watched large language models follow instructions to technically harmful outcomes because the goal was poorly specified. Scale this to superintelligent systems, and you see why researchers worry.

Fear #3: AI Bias Will Hurt Real People

Why is AI bias a big problem? Because AI systems trained on biased data reproduce and amplify those biases at scale.

Amazon’s recruiting AI famously penalized resumes containing the word “women’s.” In the 2018 Gender Shades study, commercial facial analysis systems misclassified darker-skinned women at error rates of up to about 34%, compared with under 1% for lighter-skinned men.

This is why people panic about AI in hiring, lending, and criminal justice—areas where biased decisions devastate lives.

| AI Bias Category | Real-World Impact | Affected Populations |
| --- | --- | --- |
| Hiring algorithms | Job rejection | Women, minorities |
| Facial recognition | False arrests | Black individuals |
| Loan decisions | Credit denial | Low-income communities |
| Healthcare AI | Misdiagnosis | Underrepresented groups |
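To make "bias at scale" concrete, here is a minimal Python sketch of one common way to check automated decisions for disparity: compare approval rates across groups. The groups, the decisions, and the 0.8 threshold are all assumptions for illustration. The threshold borrows the "four-fifths" rule of thumb from US employment guidance, and it is not a method used by any system mentioned in this article.

```python
def selection_rates(decisions):
    """Return each group's share of approved decisions.

    `decisions` is a list of (group, approved) pairs.
    """
    totals, approvals = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + (1 if approved else 0)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Divide the lowest approval rate by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical data: group A approved 6 of 10 times, group B 3 of 10 times.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: the four-fifths rule of thumb flags ratios below 0.8.
flagged = ratio < 0.8
print(rates, ratio, flagged)
```

A low ratio does not prove discrimination on its own, but it is the kind of signal that should trigger a human review of how the system makes decisions.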

Actionable tip: If you’re subject to an AI-driven decision (loan, job application, insurance), ask whether a human can review the decision. Many jurisdictions now require this option.

Why ChatGPT Specifically Triggers Panic

Fear #4: Chatbots Feel Too Human

Why is everyone scared of AI like ChatGPT? Because it crossed an uncanny valley most people weren’t prepared for.

When something responds intelligently, remembers context, and sounds human, our brains pattern-match it to “person.” Then we panic about what that “person” might become.

ChatGPT reached 100 million users in about two months, which made it the fastest-growing consumer application on record at the time. This velocity of adoption amplified the panic about AI. Suddenly, everyone had direct experience with capable AI, not just headlines about distant research.

What this AI gets wrong: ChatGPT confidently produces false information. It doesn’t “know” things—it predicts likely next words. Understanding this limitation reduces panic significantly.
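If "predicts likely next words" sounds abstract, a toy Python sketch may help. It counts which word most often follows another in a tiny made-up corpus, then "predicts" from those counts. This is a deliberately simplified stand-in, and the corpus and function names are assumptions for illustration. Real chatbots use large neural networks rather than word-pair counts, but the core idea of choosing a statistically likely continuation instead of looking up a fact is similar.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = build_bigram_counts(corpus)
print(predict_next(counts, "the"))    # "cat" follows "the" most often here
print(predict_next(counts, "sat"))    # "on" is the only word that follows "sat"
print(predict_next(counts, "zebra"))  # never seen, so no prediction
```

The model answers "cat" after "the" because that pairing is most frequent in its data, not because it knows anything about cats. That is the same reason a chatbot can sound confident while being wrong.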

Fear #5: Deepfakes Destroy Trust

AI-generated videos of politicians saying things they never said. Voice clones of family members calling for “emergency” money. Revenge pornography created without consent.

This is why people panic about AI in the information space. If we can’t trust video evidence, recordings, or even phone calls, what foundation remains for truth?

In 2025, deepfake-related fraud losses exceeded $25 billion globally. India reported a 230% increase in deepfake scams. Russia documented weaponized deepfakes in information warfare.

Actionable tip: Establish voice verification passwords with family members for urgent financial requests. No code, no transfer—period.

The Economic Anxiety Driving AI Panic

Fear #6: Socioeconomic Inequality Will Explode

Why people panic about AI often connects to wealth concentration fears.

Companies implementing AI see productivity gains of 20-40%. Who captures those gains? Historically, capital owners benefit more than workers during technological transitions.

Is AI panic overblown or justified? The panic about complete economic collapse seems overblown. The concern about increased inequality without policy intervention? That’s justified.

Fear #7: Small Businesses Can’t Compete

Large corporations deploy AI at scale. They automate customer service, optimize supply chains, and personalize marketing with budgets small businesses can’t match.

This contributes to why people panic about AI in the entrepreneurial community. The fear isn’t that AI is bad—it’s that AI advantages compound for those already ahead.

Actionable tip: Small businesses should focus on AI tools with low barriers to entry—ChatGPT for content, Canva for design, Jasper for marketing copy. Start small, but start.

What Experts Actually Worry About

Fear #8: AI Systems Operating Beyond Understanding

How can AI cause harm without being evil? Through complexity we can’t fully comprehend.

Modern AI systems contain billions of parameters. Even their creators can’t fully explain why specific outputs occur. This “black box” problem means we deploy systems whose decision-making processes remain partially opaque.

Why people panic about AI in critical infrastructure comes from this opacity. An AI controlling power grids, financial systems, or military assets that humans can’t fully understand or predict? That’s reasonable concern territory.

Fear #9: Autonomous Weapons

Why do experts warn about AI extinction risk? Autonomous weapons sit near the top of that list.

The concern isn’t Terminator robots. It’s AI systems that select and engage targets without meaningful human control. Over 100 nations have called for international restrictions, but development continues.

When you understand why people panic about AI in military contexts, you realize the fear isn’t about evil AI—it’s about competitive pressure driving deployment before safety is ensured.

Separating Hype from Reality

Fear #10: AI Will Achieve Consciousness

Let me be direct: nobody knows if AI can become conscious. Anyone claiming certainty either way is overselling their knowledge.

Current AI systems process information without subjective experience (as far as we can determine). But panic about AI consciousness reflects genuine philosophical uncertainty.

What this fear gets wrong: Conflating capability with consciousness. An AI can be extraordinarily capable—and dangerous—without being conscious. The risks don’t require consciousness to materialize.

Fear #11: We’re Building Something We Can’t Control

Panic about controlling AI relates to recursive self-improvement scenarios.

The concern: an AI improving its own capabilities could rapidly exceed our ability to constrain it. This “takeoff” scenario underlies many extinction risk arguments.

How dangerous is AI, explained simply? Current AI poses real but manageable risks. Future AI could pose existential risks if development outpaces safety research. We’re in a window where choices matter enormously.

Fear #12: The Pace Is Simply Too Fast

Between GPT-3 and GPT-4, capability jumps surprised even researchers. Panic about the pace of AI comes from this sense of exponential acceleration.

Two years ago, AI couldn’t reliably write coherent essays. Today, it passes bar exams, medical licensing tests, and creates sophisticated code. Where will it be in two more years?

This uncertainty is the meta-reason why people panic about AI. Humans struggle with exponential change. Our intuitions evolved for linear environments.
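A quick arithmetic sketch in Python shows why exponential change defeats linear intuition. The starting value, step size, and periods are arbitrary assumptions chosen only to show the shape of the two curves. They are not measurements of AI progress.

```python
def linear_growth(start, step, periods):
    """Add the same fixed amount every period."""
    return start + step * periods


def doubling_growth(start, periods):
    """Double the value every period."""
    return start * 2 ** periods


# Both processes start at 1; the linear one adds 1 per period.
for periods in (1, 5, 10, 20):
    print(periods, linear_growth(1, 1, periods), doubling_growth(1, periods))
```

After 10 periods the linear process has reached 11 while the doubling process has reached 1,024. After 20 periods the gap is 21 versus 1,048,576.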

The AI Panic Reality Check

Let me offer a balanced perspective on why people panic about AI.

What’s probably overblown:

- Immediate extinction scenarios

- AI developing hostile intentions

- Complete job apocalypse within 5 years

- AI spontaneously going “rogue”

What’s probably underappreciated:

- AI bias causing widespread discrimination

- Deepfake erosion of truth

- Concentration of AI power in few companies

- Need for updated education and social safety nets

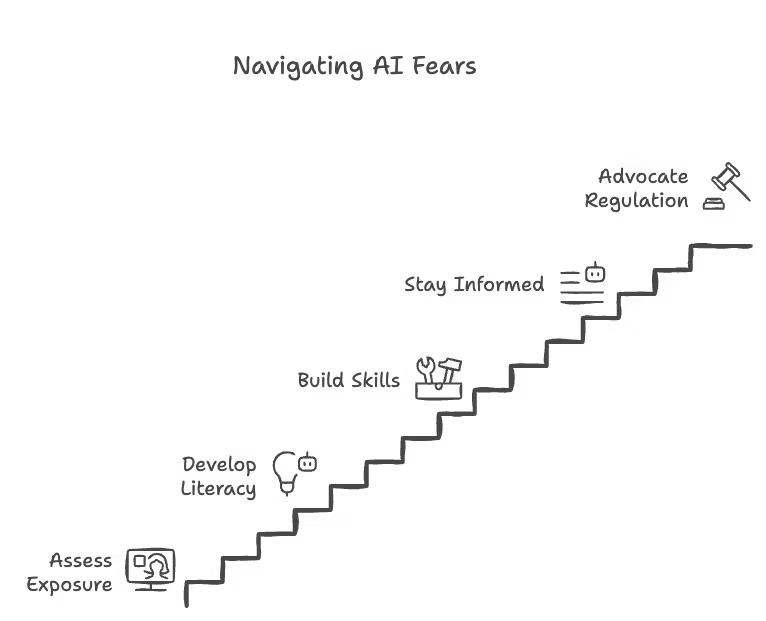

Implementation Roadmap: Responding to AI Fears

Understanding why people panic about AI is step one. Acting on that understanding is step two.

Step 1: Assess Your Personal Exposure

Honestly evaluate how AI might affect your job, industry, and skills over the next 5 years.

Step 2: Develop AI Literacy

You don’t need to code. You need to understand what AI can and can’t do, where it fails, and how to use it effectively.

Step 3: Build AI-Resistant Skills

Focus on creativity, emotional intelligence, complex problem-solving, and physical adaptability.

Step 4: Stay Informed, Not Panicked

Follow credible AI safety researchers and institutions, not sensationalist headlines.

Step 5: Advocate for Smart Regulation

Support policies that promote AI safety research, algorithmic transparency, and worker transition programs.

Master Prompts for Understanding AI Better

Use these prompts with ChatGPT or Claude to deepen your understanding of why people panic about AI:

Prompt 1 – Personal Impact Assessment:

“Analyze my job role [describe your role] and identify which specific tasks are most susceptible to AI automation in the next 3 years. Then suggest 3 skills I should develop that AI currently struggles with.”

Prompt 2 – Bias Detection:

“I’m about to use [specific AI tool] for [specific purpose]. What biases should I watch for based on known issues with similar AI systems? How can I verify the output isn’t reflecting problematic patterns?”

Prompt 3 – News Literacy:

“Here’s a headline about AI: [paste headline]. Help me understand what this actually means technically, what’s potentially sensationalized, and what legitimate concern (if any) underlies the story.”

Comparison: Top Resources for Understanding AI Risks

| Resource | Best For | Level | Cost |
| --- | --- | --- | --- |
| “Superintelligence” by Nick Bostrom | Deep existential risk analysis | Advanced | $18 |
| Coursera: AI For Everyone | Non-technical overview | Beginner | Free |
| “Scary Smart” by Mo Gawdat | Accessible fear explanation | Beginner | $16 |

Geographic Perspectives on AI Panic

Why people panic about AI varies by region.

United States: Job displacement and corporate AI dominance drive primary concerns. The culture of individual achievement makes automation threats feel personal.

China: Government AI integration sparks privacy concerns, though official narratives emphasize opportunity. The social credit system exemplifies fears made real.

India: With massive populations in automatable jobs, economic disruption fears dominate. Language AI threatens call center industries employing millions.

Russia: AI in information warfare and surveillance shapes public concern. The state-AI relationship differs fundamentally from Western models.

Europe: GDPR culture means privacy and regulatory concerns lead. The EU AI Act represents the most comprehensive attempt to legislate AI safety.

FAQ: Your Questions Answered

Why are people panicking about AI taking jobs?

Because credible estimates suggest hundreds of millions of jobs face automation pressure, and historical patterns of job creation may not hold for this transition.

Is AI really going to end the world?

Probably not immediately. But leading researchers believe uncontrolled advanced AI poses genuine long-term existential risk that deserves serious attention.

What are the real dangers of artificial intelligence?

Near-term: bias, deepfakes, job displacement, privacy erosion. Long-term: loss of human control, value misalignment, concentrated power.

Should I be worried about AI replacing my job?

You should be aware and adaptive, not paralyzed with worry. Assess your specific role’s automation susceptibility and invest in complementary skills.

Is AI panic overblown or justified?

Both. Immediate apocalypse scenarios are overblown. Concerns about bias, economic disruption, and long-term control are justified.

Why do experts warn about AI extinction risk?

Because a superintelligent AI would, by definition, be more capable than the humans trying to control it. If such an AI’s goals don’t align with ours, the consequences could be severe.

What Changes Next

Understanding why people panic about AI prepares you for a changing landscape.

In 2026: Expect continued capability jumps, more AI-generated content, and growing regulatory attention. Most of the EU AI Act’s obligations are scheduled to start applying in August 2026.

By 2028: AI agents—systems that take autonomous actions—will become mainstream, raising new control questions.

By 2030: The job market will look noticeably different. Those who adapted early will be positioned well. Those who ignored the changes will struggle.

Your Challenge and Call to Action

You now understand why people panic about AI better than most people. But understanding isn’t enough.

Your challenge this week: Have one conversation with someone in your industry about AI’s potential impact. Share what you learned here. Ask what they’re seeing.

Your question to consider: Which of the 12 fears resonates most with your situation, and what’s one concrete step you’ll take to address it?

Drop your answer in the comments. I read every response, and your perspective might help someone else navigating the same concerns.

Remember: why people panic about AI is understandable. How you respond to that panic determines whether AI becomes a threat or a tool in your life.

The choice is still yours. Make it deliberately.

About the Author

Animesh Sourav Kullu is an international tech correspondent and AI market analyst known for transforming complex, fast-moving AI developments into clear, deeply researched, high-trust journalism. With a unique ability to merge technical insight, business strategy, and global market impact, he covers the stories shaping the future of AI in the United States, India, and beyond. His reporting blends narrative depth, expert analysis, and original data to help readers understand not just what is happening in AI — but why it matters and where the world is heading next.

Government & Research Sources

| Resource | URL |
| --- | --- |
| White House AI Order | whitehouse.gov/ai-executive-order |
| EU AI Act | artificialintelligenceact.eu |
| Stanford HAI | hai.stanford.edu |
| Future of Life Institute | futureoflife.org/artificial-intelligence |
| Center for AI Safety | safe.ai |